J74 V-Module

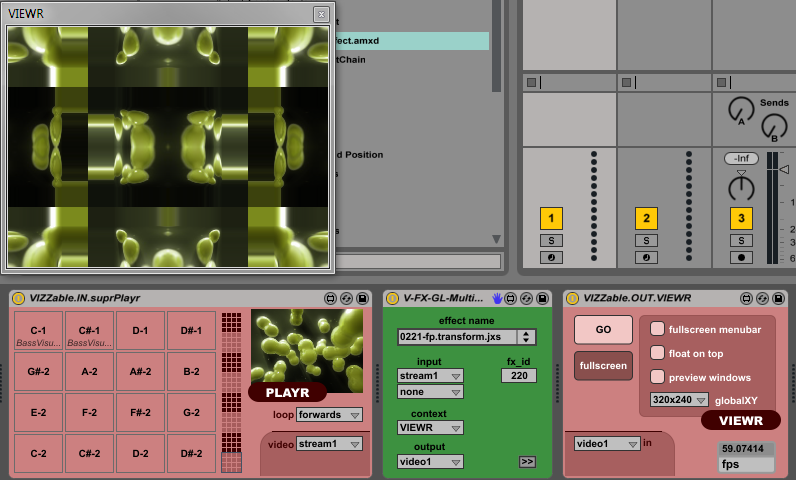

V-Module is a collection of Max for Live devices for real-time video content creation and processing within Ableton Live. It works on top of Live and is essentially a Live-like interface to Jitter functionality in Max (along with a few creations of my own).

IMPORTANT: Currently only Live 9.7.x and Max 7.3.x are supported. V-Module does not yet work with Live 10 or Max 8.

Here you can download the latest version of V-Module. Please read the included readme file ("How to Install V-Module.txt") first, and have a look at the demonstration Live sets in the Live Pack and at the video tutorials available on my Vimeo and YouTube pages. This should let you install V-Module and start using the environment quickly.

Note: if you are planning to watch the tutorials, please be aware that some devices may have been modified and/or renamed across the various versions of V-Module (sorry about that, but in the end that's the nature of experimental work!). Also refer to the PDF documentation if you need more information on the devices.

As an M4L toolset, V-Module brings things together:

- Jitter's advanced real-time video manipulation

- Jitter's interface to 3D creation (OpenGL, shaders)

- Live automation (clip envelopes, synchronization)

- Parameter automation recording

- Control surface mapping

It is also open and extensible: it interfaces natively with VIZZable, another great M4L tool for video handling, and the included documentation explains how to write your own devices and integrate them into its workflow.

What can you do with V-Module?

I love improvisation and, at the same time, electronic/computer-based music. Many Ableton Live users will recognize this duality as their own. On the other hand, I am a visual person, and I always see (and seek) a connection between music, shapes, color and movement. This personal view, together with the technical possibility of very tight A/V synchronization, is the reason V-Module exists.

Modularity

V-Module is composed of a set of modules: devices are relatively compact and act as atomic building blocks in a flexible chain. The point is that devices can be combined in chains (within Live) without creating a new patcher or modifying existing ones to interconnect them. Chains can be modified easily, at any time and in real time. This offers an improvisation environment where you can play with things in creative ways without worrying (at least ideally) about the programming layer underneath.

A community full of ideas

A small, growing and passionate community has gathered around the same idea (a modular environment for real-time A/V creation and manipulation within Ableton Live). Both V-Module and Zeal's VIZZable projects are part of this initiative. If you are interested, you can follow our activities at the JitterinMax4Live Google group. Some of the video material we produced can be viewed at the JitterinMax4Live Vimeo page. You can also find V-Module on GitHub here.

V-Module use and Intellectual Property

V-Module is freely distributed under the Creative Commons “No Derivative Works” licence. It is a genuine, self-developed tool with no particular strings attached: you are completely free to use and modify it as a tool for personal use (media production or performance), even in your professional activities. You are NOT allowed, though, to take any part of it (concepts, algorithms, devices or code) and offer or sell it as an “original” product of your own.

V-Module - Release notes:

Tips for optimizing performance (all platforms):

- Set the Ableton Live audio buffer size to the lowest possible value for your system and audio applications (Preferences -> Audio -> Hardware Setup).

- If you have a GPU (nVidia, AMD/ATI, etc.), use GPU-based effects (such as V-FX-GL-Clab.amxd) and GPU-based output (such as the V-GL-RenderMixer4RGB mixer).

- If you play back video clips, encode them with either the Photo JPEG or the Motion JPEG codec, in a MOV or AVI container. An encoding quality of 75% is fine.

- If possible, dedicate one machine to video processing and a separate machine to audio/music. This dramatically improves performance and stability (fewer interrupts, optimized chains, less CPU and GPU contention). You can of course couple the two environments, for instance by MIDI sync or by sending audio to the video machine in real time for processing (for example, envelope following of the audio to drive the video, or frequency spectrum analysis used by the video to generate shapes in real time).
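To illustrate the envelope-following idea mentioned above, here is a minimal, generic sketch in Python (not V-Module's own implementation, which runs inside Max): a one-pole attack/release follower that turns audio samples into a smooth control curve that a video parameter could follow. The function name and the default attack/release times are illustrative assumptions.

```python
import math


def envelope_follower(samples, sample_rate, attack_ms=5.0, release_ms=100.0):
    """Return a smoothed amplitude envelope for a mono signal.

    Generic one-pole attack/release follower (an illustrative sketch):
    it rises quickly toward louder input and falls slowly when the input gets quieter.
    """
    if sample_rate <= 0 or attack_ms <= 0 or release_ms <= 0:
        raise ValueError("sample rate and time constants must be positive")
    # Per-sample smoothing coefficients derived from the time constants.
    attack = math.exp(-1.0 / (sample_rate * attack_ms / 1000.0))
    release = math.exp(-1.0 / (sample_rate * release_ms / 1000.0))
    env = 0.0
    out = []
    for x in samples:
        level = abs(x)  # full-wave rectification
        coeff = attack if level > env else release
        env = coeff * env + (1.0 - coeff) * level
        out.append(env)
    return out
```

Mapping the output to, say, a brightness or zoom parameter makes the video pulse with the audio: the short attack keeps transients tight, while the longer release avoids flicker between hits.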

Tips for optimizing performance (Mac users only):

- Mac users can limit video resource contention between Live's GUI and M4L visual applications by keeping the Live GUI in the background.

Doing video in Max for Live means that Live's GUI and Max for Live compete for the same resource: OpenGL processing power on the GPU.

This limitation does not apply to Windows users, as Live's GUI on Windows does not use OpenGL (it uses DirectX instead, leaving OpenGL free for M4L).

Supported/Tested versions:

- Live 9-compatible version (experimental release 0.9.1): for Live 9.x or higher with Max 6.1.x or Max 7.x.

- Legacy Live 8 version (*not supported*, but included in the same download package): release 0.8.1 for Live 8.3 or higher with Max 5.1.9.

- Full functionality is currently available only in the 32-bit version.

Specific version notes:

Ver 0.9.1

- Compatibility for Live 9

- Many devices rewritten (and renamed), largely in Java and JavaScript

- Additional shaders

- New Particle simulations in Java

- Extensions to V-Sketch

- Support for Materials (available from Max 6.x)

- Support for VIEWR render context for VIZZable compatibility

- Compatibility with VIZZable 2.x

Ver 0.8.1

- New V-SimPlayLibrary, with more compact and powerful toolset for video clip playback

- New Audio Analysis devices (V-AuScope-xxx), with much tighter audio signal recognition and Live.API triggering

- Syphon-enabled mixer (MAC OS only) updated

- Some fixes to devices and installation pack

Ver 0.8

- Added a Syphon-enabled V-Mixer (Mac OS only), V-GL-RenderMixer4RGB-Syphon.amxd, which sends its output to any Syphon client (server name "MixerOut").

- Control devices for OSC communication (OSC2MIDI.amxd, V-MIDI2OSC.amxd)

- An efficient folder video clip player (SimPlayList.amxd)

- A new wrapper for all 250+ V-Module shaders (V-FX-GL-Clab.amxd).

- A new wrapper for complex 3D scene sketching (V-GSketchListX.amxd) with support for custom 3D scripts.

- A new wrapper for material shaders for 3D objects (V-GPreShader.amxd).

- Devices for OSC communication between V-Module and vvvv (V-Matrix2VVVV_snd.amxd, V-MIDI2VVVV_snd.amxd)

- Support for a secondary/auxiliary window (V-GL-RenderMixer4RGB-FdbckAux.amxd + V-GL-RenderAuxMonitor.amxd).

- 3D camera rewritten in Java (old version still available with "-o" name suffix)

- Support for FSAA (antialiasing) in all mixers.

- General code clean up and optimization.
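The OSC devices above exchange messages over the network. As a rough illustration of what such a message looks like on the wire, the sketch below encodes an OSC 1.0 message in Python: the address and type-tag strings are null-terminated and padded to a multiple of 4 bytes, followed by big-endian 32-bit arguments. The address "/midi/cc" and its two integer arguments are hypothetical; the addresses actually used by V-MIDI2OSC or the vvvv devices may differ.

```python
import struct


def _osc_string(s: str) -> bytes:
    """Encode an OSC string: ASCII bytes, null-terminated, padded to a multiple of 4."""
    data = s.encode("ascii") + b"\x00"
    data += b"\x00" * (-len(data) % 4)
    return data


def osc_message(address: str, *args) -> bytes:
    """Build an OSC 1.0 message supporting int32 ('i') and float32 ('f') arguments only."""
    tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, bool):
            raise TypeError("booleans are not supported in this sketch")
        if isinstance(arg, int):
            tags += "i"
            payload += struct.pack(">i", arg)  # big-endian int32
        elif isinstance(arg, float):
            tags += "f"
            payload += struct.pack(">f", arg)  # big-endian float32
        else:
            raise TypeError(f"unsupported argument type: {type(arg).__name__}")
    return _osc_string(address) + _osc_string(tags) + payload


# Hypothetical example: controller 1 set to value 64.
msg = osc_message("/midi/cc", 1, 64)
```

The resulting bytes can be sent as a single UDP datagram, e.g. with `socket.socket(socket.AF_INET, socket.SOCK_DGRAM).sendto(msg, (host, port))`, where host and port are whatever the receiving application listens on.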

Syphon Support (for MAC OS)

Note about 3D Scenes & Syphon

Syphon works out of the box only with video feeds (such as video clips or camera grabs, which are flat 2D images, or more precisely "textures"). It does not work directly with 3D objects in space. For instance, the V-Module example1 (included in the V-Module installation) is not a video clip, so you won't be able to send it to Syphon directly.

With a little work, however, it is possible to get 3D content into Syphon. Essentially, you need to set up a process that captures the 3D scene into a texture.

In V-Module you can do that quite easily from the Syphon-enabled V-Mixer (and V-Render) devices. If you expand these devices (click the small >> button at the bottom right), you should see two big toggles called Capture and AuxVideo.

Activate both. This enables full 3D scene capture (at the cost of some extra GPU load and possibly a lower frame rate). In the menu under these toggles ("send aux output to"), select a video stream: this is where the capture is sent, as a 2D image (on the GPU, a texture).

You then need to pick these frames up into a texture. In V-Module you can do this by adding a "V-GTexture" device to your project chain. Once you have dropped it in, select a "texture name" in the V-GTexture top menu. In the same device's second menu, select the same video stream you chose in the "send aux output to" menu on the V-Mixer/V-Render.

The scene should now be available to Syphon.

Ver 0.7.2

- All V-FX-GL effects rewritten with more efficient chaining (pure GPU - V-Module mixer required to work).

- 3D Tools for morphing OpenGL vertexes and objects (vertex morphing)

- 3D Tools for movement/trajectory recording and editing (V-GMoveXXXX3D devices)

- 3D Tools for OBJ file to OpenGL matrix conversion: V-GSnapObj, V-GObj2Matrix, V-G-WriteMatrix, V-GMatrix

- New device type: V-OpenGL-Processor for “post-processing”-like effects on top of 3D scenes/objects (based on OpenGL shaders)

- Tools for recording of 2D and 3D only scenes: V-GL-RenderOut, V-GL-RenderGrabOut
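The OBJ conversion and vertex-morphing tools listed above boil down, conceptually, to reading vertex positions from a file and interpolating between two vertex sets. The Python sketch below shows that idea in its simplest form (linear interpolation); it is not how V-GSnapObj or V-GObj2Matrix are implemented, the function names are hypothetical, and it assumes both meshes have the same number of vertices in corresponding order.

```python
from __future__ import annotations


def parse_obj_vertices(text: str) -> list[tuple[float, float, float]]:
    """Collect the 'v x y z' vertex lines of a Wavefront OBJ file.

    Other records (faces 'f', normals 'vn', texture coords 'vt', comments) are ignored,
    as is an optional fourth 'w' coordinate.
    """
    verts = []
    for line in text.splitlines():
        parts = line.split()
        if len(parts) >= 4 and parts[0] == "v":
            verts.append((float(parts[1]), float(parts[2]), float(parts[3])))
    return verts


def morph(a, b, t: float):
    """Linearly interpolate two equally sized vertex lists: t=0 gives a, t=1 gives b."""
    if len(a) != len(b):
        raise ValueError("vertex lists must have the same length")
    return [
        tuple(pa + (pb - pa) * t for pa, pb in zip(va, vb))
        for va, vb in zip(a, b)
    ]
```

Animating `t` from 0 to 1 (for example from a clip envelope) would then move every vertex of the first shape smoothly toward its counterpart in the second.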

Ver 0.7

- New V-Audio Instruments for playback of clips with both video and audio (through Live's mixer): V-SimAudioPlay, V-SliceAudioBeat, V-VideoAudioRack

- New V-SimAudioLooper and V-SimLooper for Live tempo warp-looping of video clips (respectively with and without audio feed)

- New V-FX-FFWrapper for FreeFrame plugins support

- All previously available V-Instruments (MIDI devices) for QT playback no longer spill audio "outside" of Live

- New V-AuBeat based on external op.beatitude~ for Live tempo settings from real-time audio input

- New V-AuBeat2CVEnvelope based on external op.beatitude~ for very tight CV envelope generation from real-time audio input

- All video input devices save their files (clips/folders) as part of the liveset

- V-ObjShape expanded for advanced texturing and multi rendering (cloning). It also saves the file as part of the liveset

- New V-ZoomPlay added (for picture zooming)

- Various enhancements to V-GMove3DW devices (3D camera movements), including a toggle for 2D/3D view switching
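Warp-looping a video clip to Live's tempo, as V-SimLooper and V-SimAudioLooper do, requires a playback rate that makes the clip last exactly a chosen number of beats. A minimal sketch of that arithmetic in Python, assuming a constant tempo and a clip length known in seconds (the function name is hypothetical, not part of V-Module):

```python
def warp_rate(clip_seconds: float, beats: float, bpm: float) -> float:
    """Playback rate that stretches a clip so it lasts exactly `beats` beats at `bpm`.

    rate > 1 plays faster (shorter loop), rate < 1 plays slower (longer loop).
    """
    if clip_seconds <= 0 or beats <= 0 or bpm <= 0:
        raise ValueError("clip length, beats and tempo must be positive")
    loop_seconds = beats * 60.0 / bpm  # target loop duration in seconds
    return clip_seconds / loop_seconds
```

For example, an 8-second clip looped over 16 beats (4 bars of 4/4) plays at rate 1.0 at 120 BPM and at about 1.067 at 128 BPM.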

Ver 0.6

- V-SlabGen instrument introduced for generation of procedural visuals (such as fractals) based on custom OpenGL shaders.

- V-GTexture introduced for texture operations, such as video-to-texture (texture layer of a 3D object) and rendering to texture.

- V-GMulti introduced for efficient cloning of a 3D object, for very large number of 3D clones in space

- Added a mixer version with OpenGL based internal 3D video feedback.

- Added a large number of OpenGL processing effects for density, proximity, morphing applications

- Added a number of V-AuScope (audio) processing effects for tighter audio to video manipulation.

- Added a range of OpenGL coordinate formula generators (V-GCoord5) for more customization of 3D object vertexes.

- Added V-Boids instrument with simple fluid simulator.

- Added V-AuEnvelope audio effect for tracking beats and generating envelopes based on Live’s tempo
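V-AuEnvelope generates envelopes based on Live's tempo. As a generic illustration of a tempo-synced envelope (not the device's actual algorithm), the Python sketch below restarts an exponential decay on every beat; the function name and the `decay` parameter, which controls how fast each pulse falls off, are assumptions.

```python
import math


def beat_envelope(t_seconds: float, bpm: float, decay: float = 5.0) -> float:
    """Envelope value in (0, 1] at time t: jumps to 1 on each beat, then decays exponentially."""
    if bpm <= 0:
        raise ValueError("tempo must be positive")
    period = 60.0 / bpm                    # length of one beat in seconds
    phase = (t_seconds % period) / period  # 0 on the beat, approaching 1 just before the next
    return math.exp(-decay * phase)
```

Sampling this function once per video frame gives a pulse locked to the tempo, which can then scale a brightness, size or displacement parameter.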

Ver 0.5

- Fixed a bug in effects and mixers caused by windows popping up automatically (after liveset save/recall); mixers now have a screen toggle button.

- Added a mixer version (V-GL-RenderMixer4RGB-Out.amxd) for an additional output stream (useful for instance to do recording to disk by V-Rec)

- Several video clip playback devices (V-VideoRack, V-SliceBeat, V-GL-Rota, V-GL-Slab with "auto" suffix name) support auto connect mode (thanks to ChrisG).

- Change in LFO modulation strategy: LiveAPI LFO modulation added with the new V-LiveMod device (for CVsend to LiveAPI modulation)

- OpenGL based effects revised: no additional window required, better readback support.

- Android support using Matt Benatan's Max/MSP control app (available at http://mattbenatan.com/) with V-DroidSens2CV and V-DroidCtrl2CV devices

- V-Joy2CV device added for support of HID devices (joysticks, gamepads, etc.), sending a CVsend as output (V-CV2MIDI can be used to convert this into MIDI).

- Several new OpenGL 3D tools (V-GCoord3, V-FX-GLOpScalar, V-GMove3DWJoy, V-GMove3DWPad) for 3D coordinates manipulation and space navigation
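Several devices above pass control values around (LFO modulation, CVsend outputs converted to MIDI by V-CV2MIDI). Assuming a normalized 0..1 control value (the actual CVsend range is not specified here), the Python sketch below shows a unipolar sine LFO and its conversion to a 7-bit MIDI CC value; the function names are hypothetical.

```python
import math


def lfo_sine(t_seconds: float, rate_hz: float) -> float:
    """Unipolar sine LFO: returns a control value in [0, 1] at time t."""
    return 0.5 + 0.5 * math.sin(2.0 * math.pi * rate_hz * t_seconds)


def cv_to_midi_cc(cv: float) -> int:
    """Map a control value in [0, 1] to a 7-bit MIDI CC value (0..127).

    Out-of-range input is clamped rather than wrapped.
    """
    cv = min(max(cv, 0.0), 1.0)
    return round(cv * 127)
```

Clamping keeps a joystick or LFO that overshoots its nominal range from producing invalid CC values; a bipolar source (for example a joystick axis in -1..1) would first need rescaling with `(x + 1) / 2`.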

Ver 0.4

- Review of all devices for compatibility with the Ableton Max for Live Production Guidelines v0.6:

- All GUI parameters are labeled in a meaningful way.

- All significant GUI parameters (type float and/or int, according to M4L constraints) can be mapped and automated for clip envelopes.

- Many devices have useful info view text (thanks M._TriangelKlang for the help)

- All devices behave politely and do not spill into Undo history

- All devices are frozen and directly compatible with Mac OSX and Windows OS

- All V-Input devices have a playback rate that can also take negative values

- All V-Input devices are memory-efficient and support both drag'n'drop and click-to-read for opening clips

- V-VideoRack supports thumbnails.
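Negative playback rates simply move the playhead backwards. A minimal Python sketch of a looping playhead that accepts any signed rate, assuming positions are measured in frames and the clip loops (the function name and parameters are illustrative, not V-Module's):

```python
def advance_playhead(position: float, rate: float, dt: float, fps: float, frame_count: int) -> float:
    """Move a looping playhead (in frames) forward or backward by dt seconds.

    rate = 1.0 is normal speed, 0.5 half speed, -1.0 normal speed in reverse.
    """
    if frame_count <= 0 or fps <= 0:
        raise ValueError("frame_count and fps must be positive")
    new_position = position + rate * fps * dt
    # Python's % with a positive modulus returns a non-negative result,
    # so moving backwards past frame 0 wraps around to the end of the clip.
    return new_position % frame_count
```

Calling this once per rendered frame, with `dt` set to the time since the previous frame, keeps playback speed independent of the actual frame rate.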

Ver 0.3.1

- V-VideoRack and V-SliceBeat support absolute paths.

Ver 0.3

- V-VideoRack rewritten with better performance and memory usage.

- V-VideoRack supports chaining of multiple V-VideoRack devices, by using pad MIDI "offset" (see example08 liveset)

Ver 0.2.1

- V-VideoRack added

- V-Cam made cross platform (Mac and Win OS)

- Mixers and V-Out devices support ESC for exiting fullscreen, "Num-Pad-0" for disable

- V-SliceBeat enhanced

- V-FX-GL-Rota-xxx: fixed theta issue with Live's undo

- V-FX-Feedback2 added

Ver 0.2

First public release

Here you can download the latest version of V-Module. Please read the "How to Install V-Module.txt" instruction file first.

Here is a user manual.